Hopsworks is a modular platform for building and operating AI systems at scale. In this post, we introduce Hopsworks’ AAA (authentication, authorization, audit) security model. We describe how Hopsworks’ project-based multi-tenant security model implements dynamic role-based access control with zero performance overhead. We show Hopsworks’ self-service support for securely sharing data across project boundaries.

Hopsworks is an AI platform for developing and running batch, real-time and LLM AI systems at scale. Hopsworks includes a feature store (Lakehouse storage for historical data, and a real-time database (RonDB) for online feature serving), model serving with KServe, and many other services needed to support and run your feature, training, and inference pipelines. In Figure 1, you can see how Hopsworks uses connectors to access data sources and provides access to external services via API keys and single sign-on (SSO) with Active Directory (AD) or OAuth2, and authorization (AuthZ) with LDAP roles or OAuth2 claims.

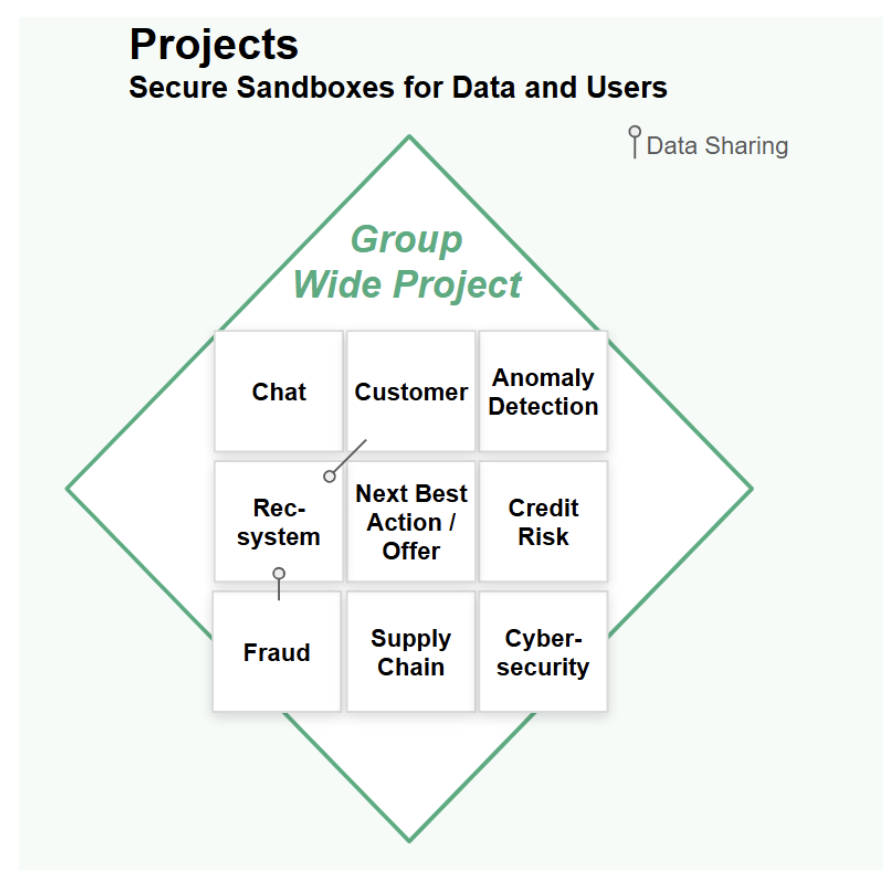

This post is concerned primarily with Hopsworks’ internal security model that enables you to host sensitive data in a shared cluster, providing powerful dynamic role-based access control and self-service capabilities. The benefit of Hopsworks’ project-based multi-tenancy model is that you can organize users and data around projects, and all activity within a project is localized to that project. That is, you don’t have to consider what privileges a user already has when you give a user access to sensitive or non-sensitive data through a project - data will not be leaked from the project. The result is that you can manage hundreds or thousands of sensitive datasets around teams in a single multi-tenant cluster - you do not need to manage and pay for separate clusters.

Role-based access control (RBAC) is a well-known security model that enables administrators to give a group of users the same access rights to selected resources. With roles, an administrator at a company could define a single security policy and apply it to all members of a department. But individuals may be members of multiple departments, so a user might be given multiple roles. Before you give a user access to a sensitive dataset, you probably have to consider what other privileges the user has, in case the user could cross-link the sensitive dataset with other data sources or copy the data to datasets with a lower security level.

Dynamic role-based access control means that, based on some policy, you can change the set of roles a user can hold at a given time. For example, if a user has two different roles - one for accessing banking data and another one for accessing trading data, with dynamic RBAC, you could restrict the user to only allow her to hold one of those roles at a given time. The policy for deciding which role the user holds could, for example, depend on what VPN (virtual private network) the user is logged in to or what building the user is present in. In effect, dynamic roles would allow her to hold only one of the roles at a time and sandbox her inside one of the domains - banking or trading. It would prevent her from cross-linking or copying data between the different trading and banking domains. That means you would not have to consider existing privileges for users when giving them access to sensitive data.
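The banking/trading example can be sketched in a few lines of Python. This is only an illustration of the idea of a single active role, not Hopsworks code; the class name `DynamicRoleSession` and the role names are hypothetical.

```python
from dataclasses import dataclass
from typing import FrozenSet, Optional


@dataclass
class DynamicRoleSession:
    """Holds a user's assigned roles and the single role active right now."""

    user: str
    assigned_roles: FrozenSet[str]
    active_role: Optional[str] = None

    def activate(self, role: str) -> None:
        # Only roles that were assigned to the user can be activated.
        if role not in self.assigned_roles:
            raise PermissionError(f"{self.user} does not hold role {role!r}")
        # Activation replaces the previous role, so at most one is active.
        self.active_role = role

    def can_access(self, domain: str) -> bool:
        # The decision uses the active role only, never the full role set.
        return self.active_role == domain


session = DynamicRoleSession("alice", frozenset({"banking", "trading"}))
session.activate("banking")
print(session.can_access("banking"), session.can_access("trading"))
```

Because the access decision looks only at the active role, holding the trading role has no effect while the banking role is active, which is what sandboxes the user inside one domain.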

Hopsworks implements a dynamic role-based access control model through a project-based multi-tenant security model. Inspired by GDPR, in Hopsworks a Project is a sandboxed set of users, data, and programs. Every Project has at least one data owner with full read-write privileges and zero or more other members, who can have either read-write privileges or read-only privileges.

A project owner may add users to their project in either the Data Scientist role (read-only privileges plus the privilege to run jobs) or the Data Owner role (full read/write/membership privileges). Users can be members of (or own) multiple Projects (see Figure 3), but inside each project, each member (user) has a unique identity that is called a project-user identity.

For example, user Alice in Project A is different from user Alice in Project B, see Figure 4. In fact, Hopsworks creates two separate identities: ProjectA__Alice and ProjectB__Alice, respectively. As such, each project-user identity is effectively a role with the project-level privileges to access data and run programs inside that project. If a user is a member of multiple projects, she has, in effect, multiple possible roles, but only one role can be active at a time when performing an action inside Hopsworks. When a user performs an action (for example, runs a Job or deploys a model) it will be executed with the project-user identity. That is, the action will only have the privileges associated with that project. You can only run jobs or access data with your project-user identity. Hopsworks also supports project-level service accounts to run jobs, workflows, and deployments.

In Figure 4, you can see how Alice has a different identity for each of the two projects (A and B) that she is a member of. Each project contains its own separate private resources / assets. When Alice performs an action inside a project or runs a command/job, Hopsworks will perform that action with Alice’s project-specific user identity. This means that the Ray job that Alice started in project A can only access assets within project A, not assets in project B.
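A minimal sketch of how a project-user identity scopes access to a single project is shown below. The function names are hypothetical, the rule that names may not contain the `__` separator is an assumption made to keep the split unambiguous, and sharing across project boundaries is deliberately left out.

```python
from typing import Tuple

SEPARATOR = "__"


def project_user_identity(project: str, username: str) -> str:
    """Build an identity of the form PROJECTNAME__USERNAME."""
    # Assumption for this sketch: neither part contains the separator.
    if SEPARATOR in project or SEPARATOR in username:
        raise ValueError("names must not contain the separator")
    return f"{project}{SEPARATOR}{username}"


def split_identity(identity: str) -> Tuple[str, str]:
    """Split a project-user identity back into (project, username)."""
    project, sep, username = identity.partition(SEPARATOR)
    if not (project and sep and username):
        raise ValueError(f"not a project-user identity: {identity!r}")
    return project, username


def may_access(identity: str, asset_project: str) -> bool:
    """An identity may only touch assets that belong to its own project."""
    project, _ = split_identity(identity)
    return project == asset_project


alice_in_a = project_user_identity("ProjectA", "Alice")
print(alice_in_a, may_access(alice_in_a, "ProjectA"), may_access(alice_in_a, "ProjectB"))
```

A job started by Alice in Project A would carry only the `ProjectA__Alice` identity, so the check against Project B's assets fails even though Alice is also a member of Project B.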

Hopsworks implements the project-user identity using an X.509 certificate issued internally by Hopsworks for every project-user identity. Access to platform services inside Hopsworks, including HopsFS and Kafka, is authenticated and authorized using the X.509 certificate. Note that Hopsworks also supports external clients (ML pipelines, etc.) using API keys for services and JSON Web Tokens (JWT) for HTML clients. API keys provide scopes to restrict the use of different services by an API key and help you implement the principle of least privilege for external access, see Figure 5. Naturally, Hopsworks cannot prevent cross-linking of data across projects once the data has left Hopsworks.

Within a project in Hopsworks, users can have one of two main roles: a reader/writer (data owner) role that can read/write to all services within the project, and a reader role (called a data scientist), who can only read from project services, apart from the model registry, where a user can write a new version of a model, but not update versions of models created by other users. In Figure 6, you can see that Alice is a Data Scientist in Project A and her “Write Job” (a job that wants to write to project services) cannot write to most of the services. In Project B, Alice is a Data Owner and her “Write Job” has privileges to write to all project services.
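The write rules for the two roles can be summarized as a small decision function. This is a sketch of the rules as described above, not the Hopsworks implementation; the service name `model_registry` and the function signature are assumptions.

```python
from enum import Enum


class Role(Enum):
    DATA_OWNER = "Data Owner"
    DATA_SCIENTIST = "Data Scientist"


def may_write(role: Role, service: str, *, new_model_version: bool = False) -> bool:
    """Decide whether a project member may write to a project service."""
    # Data Owners can write to every service in the project.
    if role is Role.DATA_OWNER:
        return True
    # Data Scientists are read-only, except that they may add a new
    # version of a model to the model registry. Updating versions that
    # other users created is not covered by this exception.
    return service == "model_registry" and new_model_version


print(may_write(Role.DATA_SCIENTIST, "feature_store"))
print(may_write(Role.DATA_SCIENTIST, "model_registry", new_model_version=True))
print(may_write(Role.DATA_OWNER, "feature_store"))
```

With these rules, Alice's "Write Job" is rejected by most services in Project A, where she is a Data Scientist, and accepted by all of them in Project B, where she is a Data Owner.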

When a user authenticates with Hopsworks, they are logged into the platform with a Hopsworks user identity. This user identity is needed to be able to construct the project-user identity - it is the “user” part of the project-user identity. In Hopsworks, a user-identity is mapped to a global Hopsworks role (independent of project membership) - a regular user or an administrator. A regular user can search for assets, update her profile, generate API keys, and be a member of different projects. Users can be allowed to create projects up to a configurable quota. Administrators have access to user, project, storage, and application management pages, system monitoring and maintenance services. They can activate or block users, delete Projects, manage Project/GPU quotas and priorities, promote normal users to administrators, and so on. It’s important to mention here that a Hopsworks administrator cannot view the data inside a project - even if they are allowed to delete a project. All users authenticate with SSO, typically an external AD or OAuth-2 service. Self-managed authentication with email/password is also supported, mainly for test deployments.

A user interacts with Hopsworks through the web application or REST APIs, and does not necessarily realize that the web application is a facade for a modular distributed system. In the background, we run our POSIX-compliant distributed filesystem, our cluster management and scheduler, a distributed in-memory database (RonDB), OpenSearch, and other services.

A fundamental principle in every distributed system is that processes exchange messages over the network or through shared state (such as a filesystem or database). When communication is performed by message passing, it is imperative that we protect the messages from adversaries reading or modifying their content and validate the identity of the caller. The security model of our core systems uses X.509 certificates. We use a self-managed Public Key Infrastructure (PKI) with X.509 certificates to authenticate and authorize users. Certificates also enable us to use the well-established TLS protocol to provide confidentiality and data integrity. Every project-level user and every service in a Hopsworks cluster has a private key and an X.509 certificate.

Hopsworks supports a number of stateful and compute services that use X.509 certificates to authenticate users, applications, and services: HopsFS, Hive Metastore, and Kafka. These services all provide their own authorization schemes. In HopsFS, ACLs (access control lists) authorize file system operations. HopsFS ACLs can also be customized directly in Hopsworks. Jobs, notebooks, and deployments in Hopsworks are provisioned with the private key and X.509 certificate of the user (or service account) that launched the job/notebook/deployment.

Hopsworks projects also support multi-tenant services that are not currently backed by X.509 certificates: OpenSearch and the MySQL API to RonDB. Hopsworks uses JSON Web Tokens (JWT) for authentication/authorization in OpenSearch. The JWT encodes both the project name and username, enabling OpenSearch to authorize access to indices - each project has a well-known OpenSearch index. RonDB has a MySQL API that requires user credentials for access.

Most multi-tenant services in Hopsworks are built on X.509 certificates. Hopsworks comes with its own root Certificate Authority (CA) for signing certificates internally. The Hopsworks root CA does not sign requests directly; instead, it uses an intermediate CA to do so. To protect against a security breach, more than one intermediate CA can be used, each responsible for a specific domain. As shown in the above figure, there is one intermediate CA for creating service certificates and project-specific user certificates. The Hopsworks intermediate CA supports two kinds of certificates: User and Service certificates. They are all signed by the same Hopsworks intermediate CA but have different lifespans and attributes. In the following sections, we look more closely at how they are issued, their lifecycle, and how they are used.

When a user in Hopsworks becomes a member of a project (either when they create their own project or are added as a member to a project), a new user is created behind the scenes. The username of this project-user is in the form of PROJECTNAME__USERNAME.

For each project-user, we automatically generate an X.509 certificate and a private key. The certificate contains the user’s username in the Common Name field of the X.509 Subject, which is used to authenticate the user. The user certificate and its corresponding private key are stored in the database. The private key is protected with a passphrase, which is encrypted with a master key before it is also stored in the database. The cryptographic material is provisioned automatically, which makes the authentication and authorization process transparent to the user.

Hopsworks’ multi-tenant services also support X.509 certificates. Services communicate with each other using their own certificates to authenticate and encrypt all traffic. Each service in Hopsworks that supports TLS encryption and/or authentication has its own service-specific certificate. Service certificates encode the service discovery domain names they are issued for and the login name of the system user that is expected to run the process. They are generated when a user creates a Hopsworks cluster. Service certificates can be rotated automatically at configurable intervals or upon request of the administrator. The services in Hopsworks that have their own X.509 certificates for encrypting their network traffic include:

The services in Hopsworks that support two-way X.509 certificates for both encrypting their network traffic and client-side authentication are:

So, now that we’ve outlined the building blocks for our security model, we will see how we actually protect all data in transit. All backend services exchange messages with each other. Both servers and clients require all communication to be done over TLS. With 2-way authentication, both entities present a certificate. The server checks that the client’s certificate is still valid and trusted. It also validates the peer’s certificate against a Certificate Revocation List (CRL) and, if the certificate has been revoked, drops the connection. As a last step of protection for project-user and application certificates, the username that is encoded in the message - which is the effective user performing an action - is validated against the Common Name field of the X.509 certificate. That way a rogue user cannot impersonate other users. In server-to-server communication, service certificates are used. Finally, the Locality (L) field of a Service X.509 certificate should also match the username of an incoming RPC.
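The checks applied to an incoming RPC can be sketched as a single decision function. This is an illustration under simplifying assumptions, not the actual server code: the `PeerCertificate` type and `accept_rpc` function are hypothetical, chain validation against the trusted CA is assumed to have been done already by the TLS layer, and the revocation list is modeled as a set of serial numbers.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import AbstractSet


@dataclass(frozen=True)
class PeerCertificate:
    """The certificate fields this sketch needs (hypothetical type)."""

    serial: int
    common_name: str
    locality: str
    not_after: datetime
    is_service: bool = False


def accept_rpc(
    cert: PeerCertificate,
    rpc_username: str,
    revoked_serials: AbstractSet[int],
    now: datetime,
) -> bool:
    """Return True only if the RPC may proceed under the peer certificate."""
    # 1. The certificate must not have expired.
    if now > cert.not_after:
        return False
    # 2. The certificate must not appear on the revocation list.
    if cert.serial in revoked_serials:
        return False
    # 3. The effective username in the message must match the certificate:
    #    the Common Name for project-user certificates, the Locality (L)
    #    field for service certificates.
    expected = cert.locality if cert.is_service else cert.common_name
    return rpc_username == expected


now = datetime(2025, 1, 1, tzinfo=timezone.utc)
alice = PeerCertificate(
    serial=101,
    common_name="ProjectA__Alice",
    locality="",
    not_after=datetime(2025, 6, 1, tzinfo=timezone.utc),
)
print(accept_rpc(alice, "ProjectA__Alice", set(), now))
print(accept_rpc(alice, "ProjectB__Alice", set(), now))
```

The last check is what stops impersonation: a valid, unrevoked certificate for `ProjectA__Alice` is still rejected if the message claims to act as any other user.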

The Hopsworks Certificate Authority keeps a list of revoked certificates. Each time a project is deleted, a user is removed from a project, a job finishes, or a certificate is rotated, Hopsworks updates its CRL and digitally signs it. All backend services periodically fetch the CRL and refresh their internal data structures so that connections with revoked certificates will be dropped.
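The periodic refresh on the service side might look like the following sketch. The `CrlCache` class, its parameters, and the idea of passing in a `fetch` callable are assumptions for illustration; verifying the CRL's digital signature is omitted here and would be required in practice.

```python
import time
from typing import Callable, FrozenSet, Iterable, Optional


class CrlCache:
    """Caches revoked serial numbers and refetches them at a fixed interval."""

    def __init__(
        self,
        fetch: Callable[[], Iterable[int]],
        refresh_seconds: float,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._fetch = fetch
        self._refresh_seconds = refresh_seconds
        self._clock = clock
        self._revoked: FrozenSet[int] = frozenset()
        self._fetched_at: Optional[float] = None

    def is_revoked(self, serial: int) -> bool:
        now = self._clock()
        stale = (
            self._fetched_at is None
            or now - self._fetched_at >= self._refresh_seconds
        )
        if stale:
            # Replace the whole set so that removed entries disappear too.
            self._revoked = frozenset(self._fetch())
            self._fetched_at = now
        return serial in self._revoked
```

One consequence of periodic fetching is visible in the sketch: a certificate revoked just after a refresh is still accepted until the next refresh, so the interval bounds how long a revoked certificate can remain usable.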

Hopsworks stores its files in HopsFS, whose backing store is an S3-compatible object store. You need to configure your S3-compatible object store to provide encryption-at-rest. RonDB is a fork of MySQL Cluster and it supports transparent encryption-at-rest.

Hopsworks HTML clients and OpenSearch use JWT for authentication and authorization. Hopsworks issues JWTs to web clients and jobs and they are rotated automatically before they expire and invalidated once the client or job has finished.

Hopsworks provides both a REST API and a MySQL API to the online feature store in RonDB. For every online feature store, a new database is created in RonDB to store the online features. The credentials for accessing that database are created by Hopsworks and securely stored in the database, encrypted by a master key. Clients of the online feature store, such as external online applications, use their API key to contact Hopsworks and retrieve their feature store credentials, with which they can read data directly from the RonDB MySQL API using a JDBC/TLS connection. Clients of the RonDB REST API can use their API key for authentication and authorization.

While Hopsworks has its own security model, it needs to integrate with the security models of the environments in which it is used. As mentioned already, Hopsworks supports SSO with identity providers like Kerberos/AD, LDAP, and OAuth-2.

You can also configure authentication rules in external systems. For example, you can configure project membership in Hopsworks using roles in an LDAP server. Hopsworks will synchronize its project membership with the LDAP roles. Also, you can use a claim in OAuth-2 to authenticate use of a model (such as an LLM) deployed in Hopsworks/KServe.

Hopsworks can run on all three major cloud providers: AWS, Azure and GCP. Granting access to cloud resources with access keys is strongly discouraged, so Hopsworks integrates with each provider’s own Identity and Access Management (IAM) system. More information is available on docs.hopsworks.ai.

In Figure 8, you can see that jobs run in Hopsworks can either be given an instance profile (with IAM role privileges to access cloud services) or a federated IAM role (a chained role) with temporary access to cloud services.

IAM roles work great as long as every component resides in the same cloud provider. As soon as you want to integrate with a third-party service on another provider or on-premises, you will need to use access keys. Hard-coding access keys is a bad practice, as sensitive information might end up in a version control system or leak outside the organization. Instead, Hopsworks provides a secrets service for securely managing confidential information, such as credentials for external services.

Hopsworks employs Kyverno to enforce security policies on Kubernetes resources. Our policies implement common security mechanisms found in enterprise Kubernetes distributions, which protect against privilege escalation, data leakage, etc. A Kubernetes resource that violates a policy is rejected at submission. For instance, a common privilege escalation vulnerability is for Pods to use hostPath volume mounts to mount the host filesystem, which might contain credentials or other Pods’ data. Kyverno will block the submission of any resource with such a volume.
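Kyverno policies themselves are written as Kubernetes YAML resources; the Python sketch below only illustrates the check that a hostPath policy performs on a submitted Pod manifest. The function names are hypothetical, and the sketch inspects plain Pod manifests only, not Pod templates nested inside Deployments or Jobs.

```python
from typing import Any, Dict, List


def host_path_volumes(manifest: Dict[str, Any]) -> List[str]:
    """Return the names of volumes in a Pod manifest that use hostPath."""
    volumes = (manifest.get("spec") or {}).get("volumes") or []
    return [v.get("name", "<unnamed>") for v in volumes if "hostPath" in v]


def admit(manifest: Dict[str, Any]) -> bool:
    """Reject any Pod that mounts part of the host filesystem."""
    return not host_path_volumes(manifest)


pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "example"},
    "spec": {
        "containers": [{"name": "main", "image": "busybox"}],
        "volumes": [
            {"name": "scratch", "emptyDir": {}},
            {"name": "host-root", "hostPath": {"path": "/"}},
        ],
    },
}
print(admit(pod), host_path_volumes(pod))
```

In this example the `scratch` volume is harmless, while `host-root` would expose the node's filesystem to the Pod, so the manifest as a whole is rejected.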

In this post, we gave an overview of how Hopsworks tackles information security, and we introduced our novel project-based multi-tenant security model and the multi-tenant services supported in Hopsworks. Our security model is based primarily on X.509 certificates: project-user certificates and service certificates. We also described how we address encryption in transit with TLS, the authentication methods for our web front-end and external clients based on JWT and API keys, and integration with third-party services.
